How I Ran a 3:48 Marathon with AI

With some help from AI apps, I achieved a 25-minute personal best.

I ran a personal best of 3:48 at last weekend’s Marine Corps Marathon with the help of some AI applications. It was my third marathon and my first as a self-coached runner. I used a traditional Running World 3:30 marathon training plan and three AI tools (Claude.ai, Garmin Connect, and Strava Athlete Intelligence) to help guide my decision-making.

Before diving into the AI apps, let me offer a little context. My goal for this marathon training cycle was to build more endurance and, at a minimum, run the entire marathon and break 4 hours total time. My first two marathons saw me bonk at mile 18 in the Chicago Marathon (4:13) and after mile 23 of the Coastal Delaware Marathon (4:15, heat stroke symptoms caused me to walk it out).

I modified the original Running World plan throughout the training cycle based on age (52), performance, life events, and physical response to training. Other modifications included adding an additional five weeks to build endurance with a 50-mile average for two months, including five 20-22 mile runs.

Me running the Blue Mile, which honors Marines who made the ultimate sacrifice. Image by Joe Newman.

Now that the final result is in, let’s review how the AI apps performed. Both Connect and Athlete Intelligence offer athlete performance analytics engines using either automated averaging or machine learning. Strava layers generative AI on top of its analytics to provide direct feedback. Claude is Anthropic’s LLM-based answer engine.

Garmin Connect

Garmin’s AI comes in several mini analytics apps via its Connect platform. It provides:

Race Predictor for paces, averaging your performances from the past 28 days

Recovery meter, which tells you how long you need to rest for full capability

Garmin Coach, which provides training recommendations to achieve a goal, and adapts based on your current fatigue and rest

Garmin Coach was so bad that I turned it off within one week of buying my watch. In my opinion, it misses what you are trying to achieve. For example, when I was building mileage in my marathon training, it kept telling me I was overtraining. Duh. And often, I didn’t feel tired or strained. So much of running is psychological, and I do not want my watch barking at me because it doesn’t understand my goals.

Garmin’s analytics and recommendations, including its Recovery meter, work off your heart rate performance. I found Recovery’s rest recommendations served as a barometer, but they were not literal or consistently accurate.

In many cases, Recovery’s rest suggestions matched how I was feeling. At other times, it might suggest 40 hours until I was ready to run when my body and plan said otherwise. And there were times when it recommended less rest than I needed.

In the end, the runner’s body is the ultimate tell. If I were so sore that I struggled to achieve performance goals, I would listen to my body, regardless of what the watch or other apps told me.

Garmin Race Predictor (pictured above) works off your 28-day running pace average, which was helpful for gauging general fitness. I have found Connect overestimates my performance by as much as 10 minutes. The estimates represent a potential figure rather than an accurate metric because they only use heart rate and pace data. For example, they do not consider humidity, fatigue, tapers, or other aspects of a marathon training cycle.

The Race Predictor was accurate with a one-minute underestimate of my September 5K (21:35). The day before the Marine Corps Marathon, Garmin Connect predicted 3:36:10. My MCM time was 3:48, but that covered 26.6 miles because of weaving through the crowd. My 26.2-mile time was 3:44:45, so Garmin was accurate within nine minutes.
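The nine-minute figure is simple clock arithmetic. Here is a minimal Python sketch of that check, using only the times quoted in this post. The helper names (`to_seconds`, `to_clock`, `prediction_gap`) are my own for illustration and are not part of Garmin Connect.

```python
def to_seconds(clock: str) -> int:
    """Convert an 'h:mm:ss' string to total seconds."""
    hours, minutes, seconds = (int(part) for part in clock.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def to_clock(total_seconds: int) -> str:
    """Format a non-negative number of seconds as 'h:mm:ss'."""
    hours, remainder = divmod(total_seconds, 3600)
    minutes, seconds = divmod(remainder, 60)
    return f"{hours}:{minutes:02d}:{seconds:02d}"


def prediction_gap(actual: str, predicted: str) -> int:
    """Seconds by which the actual time exceeded the prediction.

    A negative result means the runner beat the prediction.
    """
    return to_seconds(actual) - to_seconds(predicted)


# My 26.2-mile time versus Garmin's prediction from the day before.
gap = prediction_gap("3:44:45", "3:36:10")
print(to_clock(gap))  # 0:08:35
```

The gap works out to 8 minutes 35 seconds, which is where "within nine minutes" comes from.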

After the race, Garmin Race Predictor now says 3:36:07. To be candid, one day later I felt pretty good, so I probably did have a little gas left in the tank.

Strava Athlete Intelligence

Before diving into Athlete Intelligence, a note on Strava’s Fitness measure. Strava uses your overall performance metrics and general strain from the past three weeks to measure weekly fitness progress. This analytics program is interesting because it helps you measure weekly strain. I could see the long runs were getting progressively easier based on impact.

The Fitness measure did rank me at a much lower level compared to the two prior training cycles. This is clearly off. I am running faster, and I am stronger and more fit after my third training cycle. I PRed at the 400M, 800M, 1 Mile, 2 Mile, 5K, 15K, 10 Mile, and Half Marathon distances during this cycle. The lower scores are probably the result of different training runs, which focused on longer intervals at marathon and half marathon paces with less dramatic pace changes and corresponding heart rate surges.

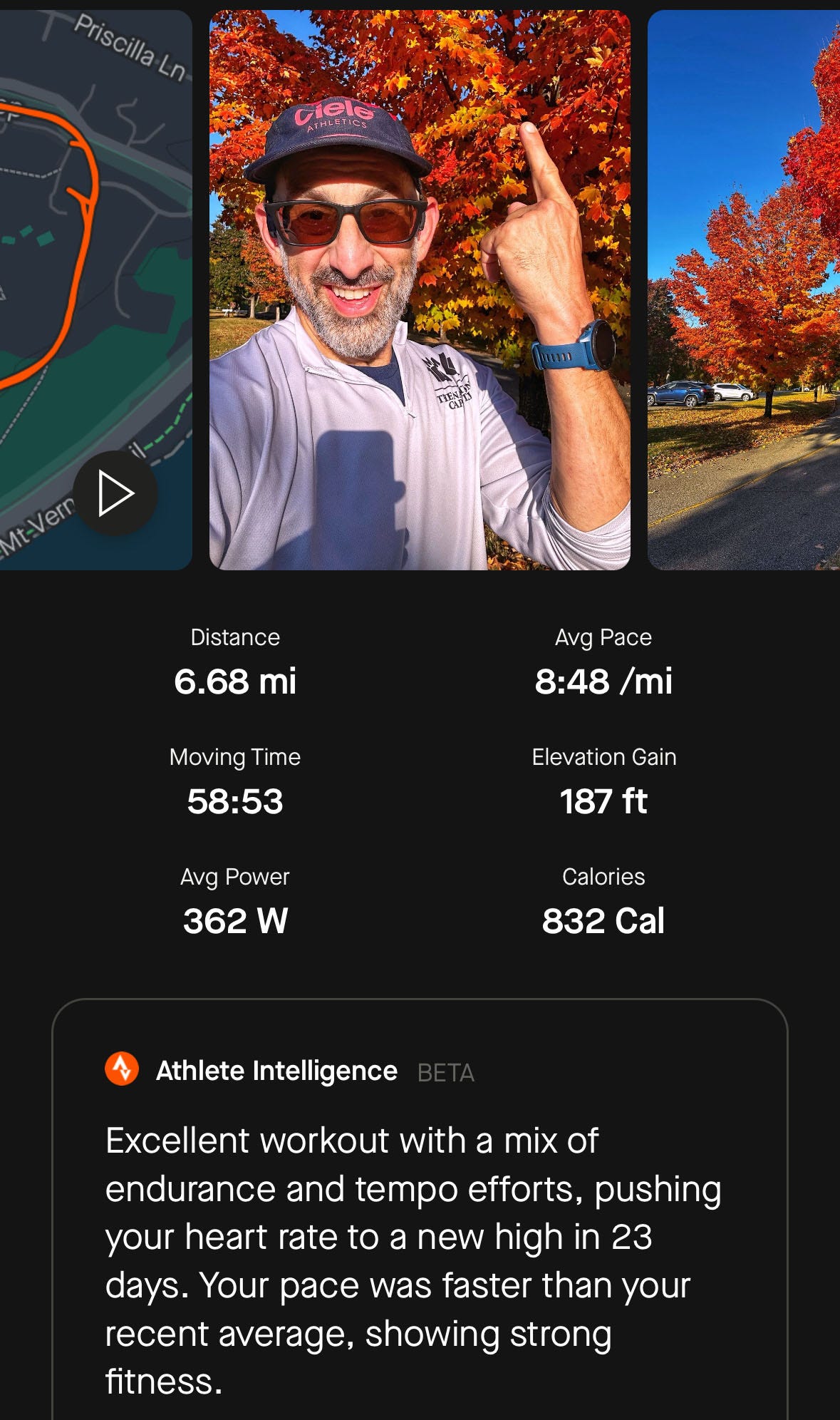

Strava Athlete Intelligence offers generative AI commentary that analyzes your performances against your efforts from the past month. The ML/LLM combo uses a running-specific vocabulary and training data. Further, it reads your commentary and notes and uses them to inform its response.

However, I find v1 to be off. It often just parrots your comments and only indicates whether you have gone faster or slower than your average pace for the past month. It’s another metric that cannot tell if you are hitting your goals. For example, you may be in a recovery cycle and may not want to go faster. In that case, Athlete Intelligence can become a negative metric, telling you that you are underperforming.

Still, Athlete Intelligence is one of the first generative AIs I have seen for running-specific tasks. Let’s see if Strava can evolve it into more of an AI agent or assistant that helps the coach or athlete fine-tune their training.

Claude

Claude is an answer engine service provided by Anthropic that competes with ChatGPT. When I had general questions, wanted to research possible solutions for specific problems, or wanted to weigh training options, I turned to Claude. While I also have ChatGPT Pro and obviously can query Google Gemini as part of a search, I found Claude responded better to queries that included more specific information, such as running performance, physical conditions, and age.

Claude helped me plan out my extra weeks of marathon training, resolve questions about performance, and adapt my plan as life scenarios evolved. For example, I ended up taking a vacation in Vermont during the week of September 30. Because I was not going to be able to weight train, Claude suggested using technical hikes and moderate to hard trail runs to replace the effort.

In a couple of instances after the Vermont trip, I was fatigued and started to see my run pace drop significantly. After I entered my prior three weeks of run and hike data, general fatigue symptoms, and age, Claude strongly suggested two days of recovery time. No matter how I changed my prompt, Claude suggested sticking to the extra rest. So I did.

Comparatively speaking, Claude did a much better job of analyzing my run times, predicting a marathon finish in the 3:40-3:45 range. If you consider my actual race pace and mileage, I was at 3:44:45 at 26.2 miles with an 8:35 average. Spot on. Claude also provided reasons for its answers, which were sound and matched what coaches and posts on running sites suggest. When I plugged the same data into ChatGPT, I got a crazy 5+ hour prediction.
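For anyone who wants to check the pace math, here is a short Python sketch that derives the per-mile average from a finish time and tests whether the finish falls inside a predicted window. The function names are hypothetical, and rounding to the nearest second is my assumption about how the 8:35 figure was produced.

```python
def clock_to_seconds(clock: str) -> int:
    """Convert an 'h:mm:ss' string to total seconds."""
    hours, minutes, seconds = (int(part) for part in clock.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def pace_per_mile(finish: str, miles: float) -> str:
    """Average pace as 'm:ss' per mile, rounded to the nearest second."""
    pace = round(clock_to_seconds(finish) / miles)
    return f"{pace // 60}:{pace % 60:02d}"


def within_window(finish: str, fastest: str, slowest: str) -> bool:
    """True if the finish time falls inside the predicted window (inclusive)."""
    return clock_to_seconds(fastest) <= clock_to_seconds(finish) <= clock_to_seconds(slowest)


print(pace_per_mile("3:44:45", 26.2))                 # 8:35
print(within_window("3:44:45", "3:40:00", "3:45:00"))  # True
```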

Conclusion

All in all, the three apps offered helpful insights and advice, depending on their design. Some could use more improvement, but all of them served well as tools that informed me, the self-coached runner. Of the three, I found Claude to be the most useful as an answer engine that helped me reduce research time. Connect and Strava both have value as analytics engines, but their suggestions or commentary were hit or miss. Both sets of AI need deeper data training, in my opinion.

As with generative and predictive AI apps in other sectors, none of these tools is capable of decision-making. In this specific context, generative running AI apps for consumers, while domain-specific, are not capable coaches. At least not yet. But they are informative and can help runners make good decisions.

For organizations looking to implement customer-facing AI apps, my biggest suggestion: Communicate clearly about what the app is capable of and what the average user should expect from the experience. Further, state overtly that the app best serves end users as a personal assistant for research, analytics, and/or whatever the actual output is.