The market and businesses are coming to terms with the generative AI boom and what its algorithms can and cannot achieve. I cannot help but share three generative AI ironies that have emerged. Perhaps you are seeing the same things or different oddities. If so, please do share them.

1) Shadow AI Bites Slow Movers

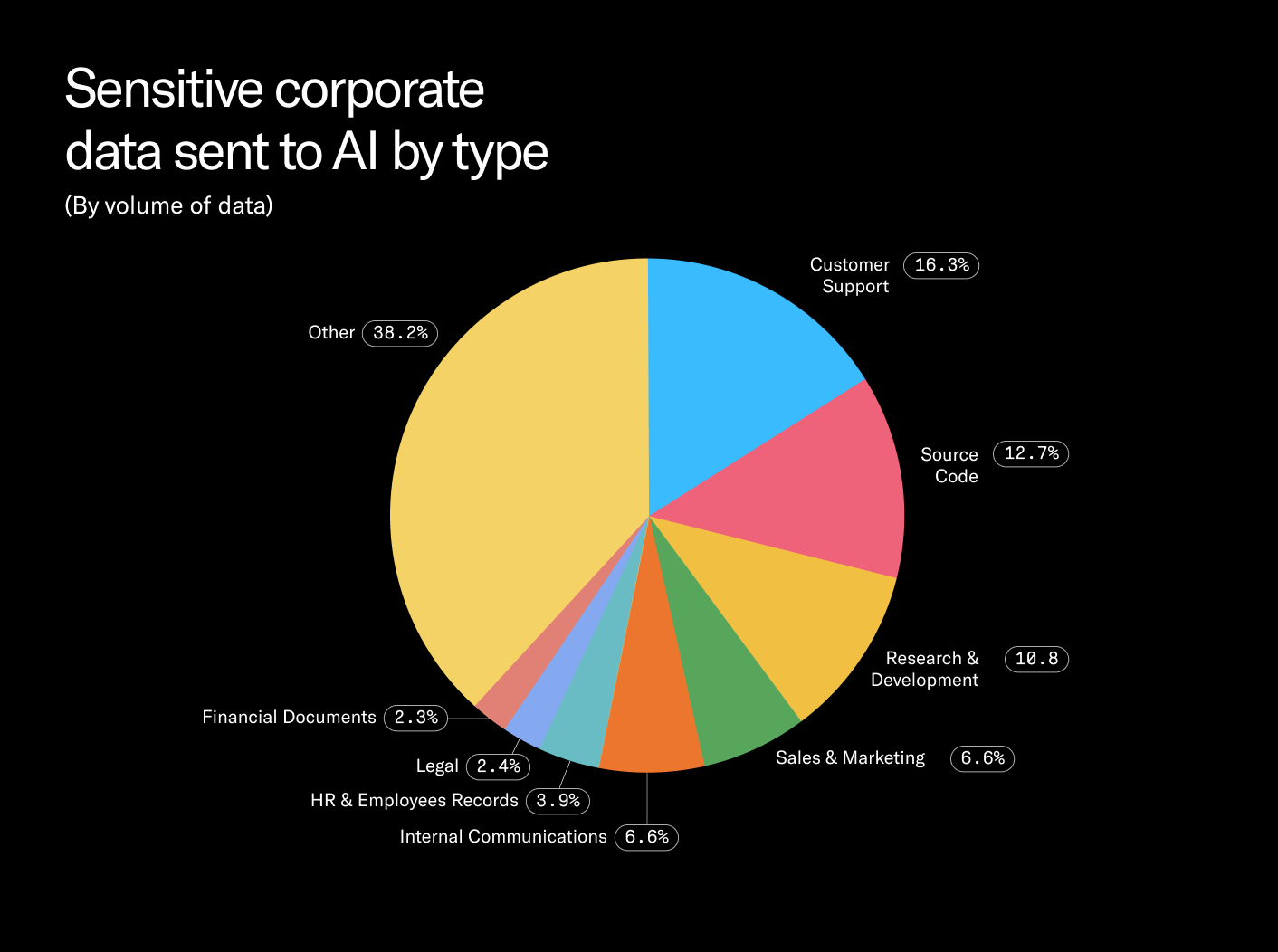

Cyberhaven detailed the use of Shadow AI in enterprises.

Companies and organizations that have taken a hard "no" approach to employees using generative AI face an uncomfortable truth: their employees already use generative AI apps on their phones. And they are probably training those algorithms with work-related prompts and corporate data. Whoops!

Just as in the cloud and social media eras, many CIO, CTO, CISO, and COO organizations have taken a draconian "zero or severely limited" approach to AI. Unfortunately, the Cyberhaven chart above shows this has only led to employees leaking data to LLMs. If executives had been more proactive, they could at least have put enterprise accounts in place to keep their trade secrets out of LLM training data.

If technology executives are concerned about AI security, and there is good reason to be, then it's time to ante up for private instances and enterprise accounts to better control their data. The good news? More enterprises seem to have learned the lesson and are embracing generative AI rather than letting their employees lead.

2) Bizarre Words Become Buzzwords Thanks to AI

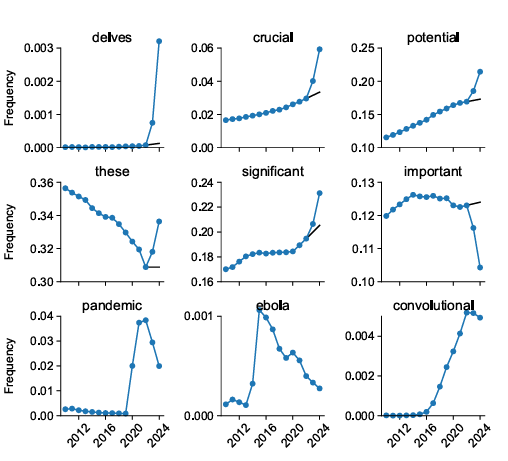

Ars Technica covered the rise of new AI buzzwords.

Efforts to prevent AI writing in academic, editorial, and scientific environments are becoming increasingly commonplace. But they haven't stopped people from using AI writing tools. An analysis of scientific papers shows the rise of somewhat arcane words in the copy, including "delves," "crucial," "significant," and "convolutional."

Marker words like these signal that an AI may have helped create some of the text. Now that we are aware of these new buzzwords, we can more easily detect AI usage. And then what? Are we going to disqualify submissions wholesale because someone used Grammarly Go, Writer, or just an LLM? Is a buzzword less buzzy because AIs started the fire?

I am sorry, I just don't get it. If people feel AI tools help them communicate at a higher level, then they should use them. Publishers, business managers, and educators need to move beyond a simplistic yes/no view of AI writing to a substantive review of the content. Questions that matter when evaluating AI-generated content:

Does the content represent the writer’s thinking or is it just an AI summary of ideas?

Is the content high quality and/or useful?

Are the statements factual and evidence-based?

Does the text violate bias or ethics norms?

A human should review important content for these matters rather than categorically dismissing writing based on how it was produced. Of course, this would require reviewers to delve into the work, pun intended. Otherwise, publishers and others who care about quality writing and digital media will find themselves battling shadow AI content, much like their IT brethren.

3) The New Luddite Movement Is Sad

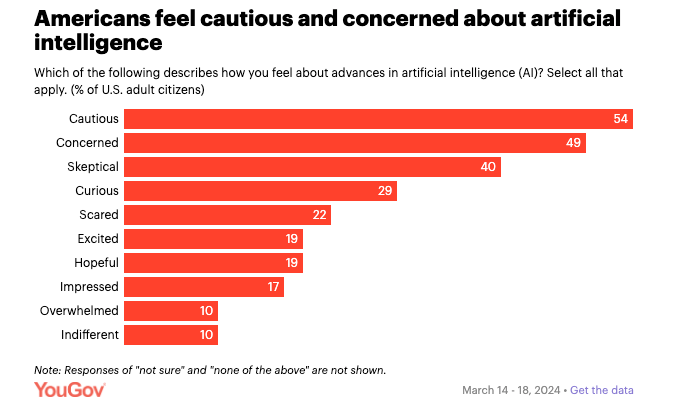

YouGov discussed Americans' negative views of AI last spring.

Anyone following AI has seen that a significant minority of businesspeople and the general public oppose AI's advancement. By some estimates, at least one-third of people in business and in the general public oppose AI or are skeptical of its impact. The anti-AI movement is sad because the technology sector's own marketing fuels it.

This new generation of Luddites is driven by dramatic statements and fear about AI's impact. A Luddite is someone who opposes technological advancement or progress; the movement arose from the Industrial Revolution and its impact on the textile industry. Of course, some resistance is to be expected, as with any new technology, but it should not extend to a significant minority of the population, nor should it be so emotionally charged.

Silicon Valley startups continue to botch the marketing of AI technologies to businesses and the general public. AI startups have been plagued by mismatched product marketing, ethical vacuums, lawsuits over training data, hallucinations, insensitive approaches to job displacement, volatile executive turnover, overhyped promises, and more. Naturally, these mistakes have fueled a backlash.

Using the term AI itself is a mistake. The cultural baggage surrounding the term, from HAL 9000 to the most recent Mission: Impossible movie, is simply toxic. Candidly, AI is far from a sentient being, and that is true even of the next-generation technology some startups are now marketing as artificial general intelligence, or AGI. In fact, the growth of AI's cognitive capabilities is slowing down.

In many ways, tech companies have been their own worst enemies. That’s just sad. And ironic.