The Role of Trust in an Era of AI Bots

Permission marketing is not new. But the principles of opt-in marketing are often abused. AI will aggravate rising tensions about privacy and personal data usage.

Before discussing AI’s impact on personal data usage, it’s helpful to review the current state of privacy ethics. The Cambridge Analytica crisis revolved around the exploitation of people’s data, particularly their friend networks. In actuality, their use of the free social network Facebook came with an opt-in that allowed Facebook to sell their user data. The small print four paragraphs down in the user policy escapes most users.

That being said, the use of data was egregious, destroying trust on a widespread basis. While the extreme example offended, thousands of unknown abuses occur, too; they just haven’t been exposed.

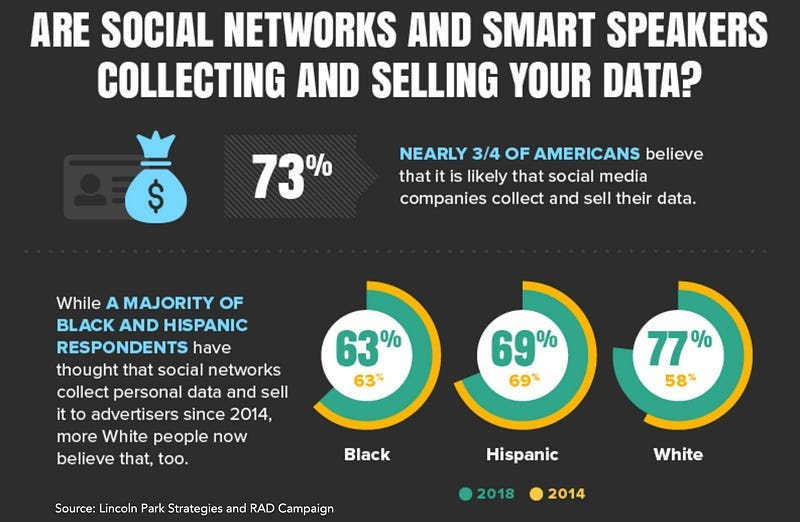

With social network marketers continually abusing permission-based data, most consumers no longer trust anything they read or see online. A May 2018 poll conducted by Lincoln Park Strategies and RAD Campaign revealed some astonishing data about Americans’ online data usage:

73% believe social media companies are collecting and selling their data

74% don’t read or skim social network terms of service

75% of Americans think their data is getting tracked by cookies without their consent

58% believe in-home smart speakers collect their data

The new European regulation on corporate data usage, GDPR, attempts to rectify some of these issues by forcing companies doing business in Europe to get full opt-in before collecting data and to notify users of data breaches. Many American companies comply with GDPR to protect international customers, using prompts on their sites to request permission for cookies.

The Lincoln Park Strategies/RAD Campaign study demonstrates that most Americans will blow through these opt-ins to use services regardless of data usage. However, they assume that their data is being used to exploit them.

AI only complicates the matter, with bots deployed to find mirror audiences and run a wide array of automated communications services (from email and texts to pop-ups and chatbots) to convert customers. Further, customer segments are matched against larger databases of lookalike customers to generate new business leads.

Because customer data is widespread and used online through casual opt-in policies, many people receive unwanted communications from companies they never interacted with. Usually, this takes the form of advertisements and spam emails, but it can include other methods like robocalls. Thanks to AI, we’re seeing a dramatic increase in this kind of activity.

How AI Will Thrust a Moral Mirror on Companies

The dangers of using AI in this manner are manifold. Data and developer biases that may prevent some customer segments from enjoying services will continue to create a moral and ethical crisis for companies. Above all, unintended data and algorithm biases can paint brands as biased or politically motivated.

Further, companies will bear the brunt of consumer angst. It doesn’t matter that they only deployed machine learning programs to better their business. Customers assume brands vet their data and AI usage, and if a brand does not, it breaks trust.

Most marketers integrate machine learning programs offered by third parties based on vendor promises and past performance. When purchasing a database or an algorithm, a marketing strategist assumes it’s clean. Consumers will not allow blame for a resulting problem to be placed on a third-party tech company, even if it is Google or Facebook.

The Forrester report “The Ethics Of AI: How To Avoid Harmful Bias And Discrimination” examined the challenges posed by bias and reached ethics conclusions that every marketer should know. Governments cannot be relied on to create effective regulations to protect online data. Instead, consumers will look to corporations to become good or bad arbiters of data. As a result, when a data breach, bias, or another form of data exploitation occurs because of AI, the brand gets tarnished.

Forrester Ethics of AI report author Brandon Purcell writes, “Just as FICO isn’t held accountable when a consumer questions a bank’s decision to deny them credit, so Amazon, Google, and other providers of trained machine learning models will not be held accountable for how other companies use their models. Instead, the companies themselves, as the integrators of these models, will bear the consequences of unethical practices.”

Ensuring sound implementation of AI and data solutions will require marketing departments to deepen their vetting processes. A vendor may have a good answer, but the brand itself will still need to check the integrity of the algorithm against bias. That requires brands to employ or have access to skilled data scientists for quality assurance.
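To make that kind of check concrete, here is a minimal sketch of one test a data scientist might run on a vendor’s model: compare how often the model selects customers in each segment, then divide the lowest rate by the highest. The segment names, the sample data, and the 0.8 review threshold are all assumptions for illustration. The threshold borrows from the “four-fifths” rule of thumb used in US employment selection, not from any marketing standard, and a real audit would look at far more than one ratio.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive model decisions per customer segment."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for segment, decision in records:
        totals[segment] += 1
        if decision:
            selected[segment] += 1
    return {segment: selected[segment] / totals[segment] for segment in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no segment was selected at all, so there is no gap
    return min(rates.values()) / highest


# Hypothetical audit sample: (segment, did the model show the offer?)
sample = [
    ("segment_a", True), ("segment_a", True),
    ("segment_a", True), ("segment_a", False),
    ("segment_b", True), ("segment_b", False),
    ("segment_b", False), ("segment_b", False),
]

REVIEW_THRESHOLD = 0.8  # assumed cut-off, borrowed from the four-fifths rule

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD

print(rates)
print(f"ratio={ratio:.2f}, needs_review={needs_review}")
```

A low ratio does not prove the algorithm is biased. It flags a gap that someone at the brand, not just the vendor, should be able to explain before the model goes live.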

Some may see this as a personal bias, but after working in marketing for more than 20 years, I sincerely doubt that a vast majority of brands will do this. While we as a profession do a great job of talking about ethics and best practices, most do not or cannot marshal the internal resources, buy-in, or knowledge to protect their companies from transgressions produced by machine learning.

As a result, many brands will have what Brandon Purcell calls a “moral mirror” thrust upon them, showing them and their customers the unconscious biases of their businesses. Unfortunately, these challenges will be aired on the public stage, almost certainly creating a crisis in the worst instances. The moral mirror will become a Black Mirror.

Marketers and their executive teams should revisit their crisis PR plans. Specifically, marketers should create guidelines for an AI-related adverse event, including how to address data exploitation or bias in a manner that satisfies customers.